Summary

I have built large-scale generative systems for international art exhibitions and stadium concerts. My expertise spans end-to-end development of complex projects, from EEG brainwave translation to ML pipeline deployment, turning ambitious creative concepts into sophisticated technological experiences.

Work Experience

Generative Visual System Designer @ XCEPT, Hong Kong (On-site)

June 2024 – July 2025

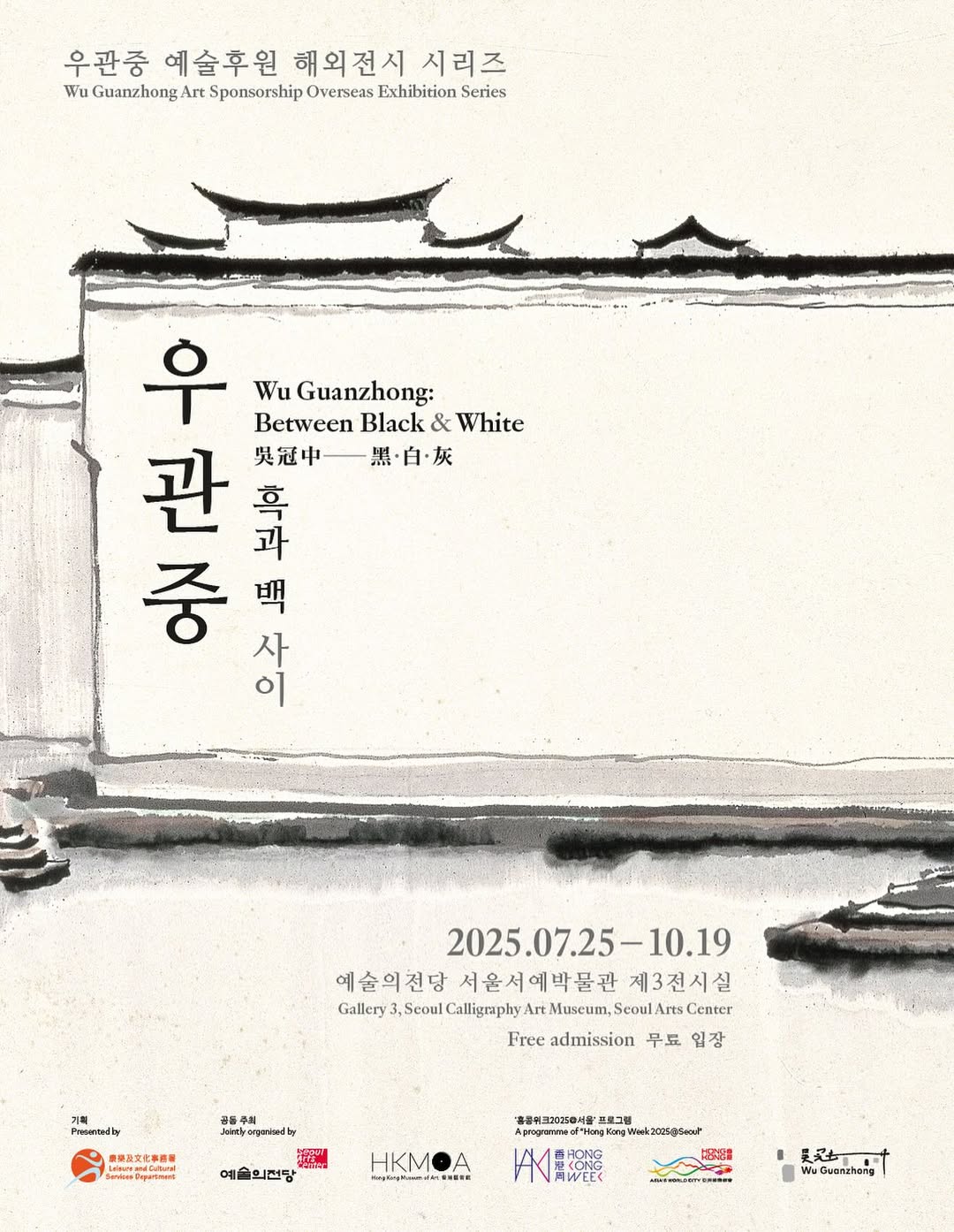

- Sentient Pond: Seoul Edition (2025): As Lead Programmer and Machine Learning Engineer, led the end-to-end design and deployment of an interactive system converting user sketches into paintings for a four-month exhibition at Seoul Arts Center and HKMoA, venues with a combined audience of 4.5+ million annual visitors. Trained a Convolutional Neural Network (Keras/TensorFlow) for stroke structure analysis and applied a prompt-engineered CLIP model for semantic and vector alignment across 400+ categories, including Korean, English, and Chinese handwriting. Developed a fallback-resilient multi-stage classifier; implemented contour parsing, stroke recovery, and patchwork generation via OpenCV. Built a threaded Cairo renderer to merge live input with archival sketches into adaptive layouts. Deployed Stable Diffusion with ControlNet and custom checkpoints across local and Flask-based remote servers. Designed and programmed the full UI/UX in Python with Tkinter; integrated OSC (TouchDesigner), automated archiving, logging, and logic preservation. Leveraged Korean fluency to proofread and edit all exhibition texts for linguistic accuracy.

- After Snowfall (2024): Led the end-to-end development of a real-time visual system that integrated a lead dancer's brainwaves (Muse EEG) with 6DOF motion tracking (Vive VR controller) within TouchDesigner, driving generative digital calligraphy inspired by Wang Xizhi. Designed visuals translating motion into dynamic ink strokes and particle effects. Produced projections on the stage floor, a sculptural backdrop, and a translucent scrim, refined through iterative collaboration with the dancers and choreographer to align with the artistic vision.

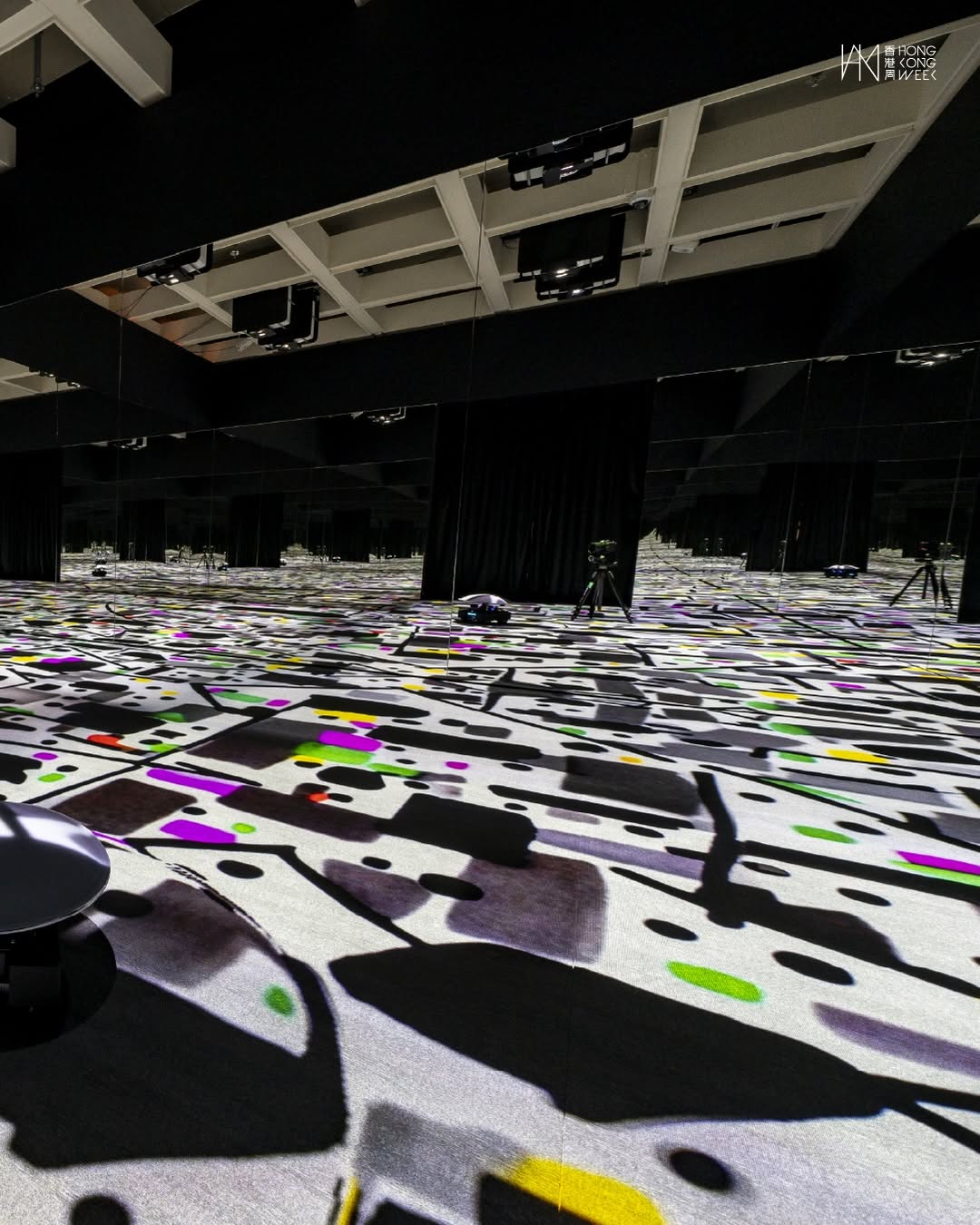

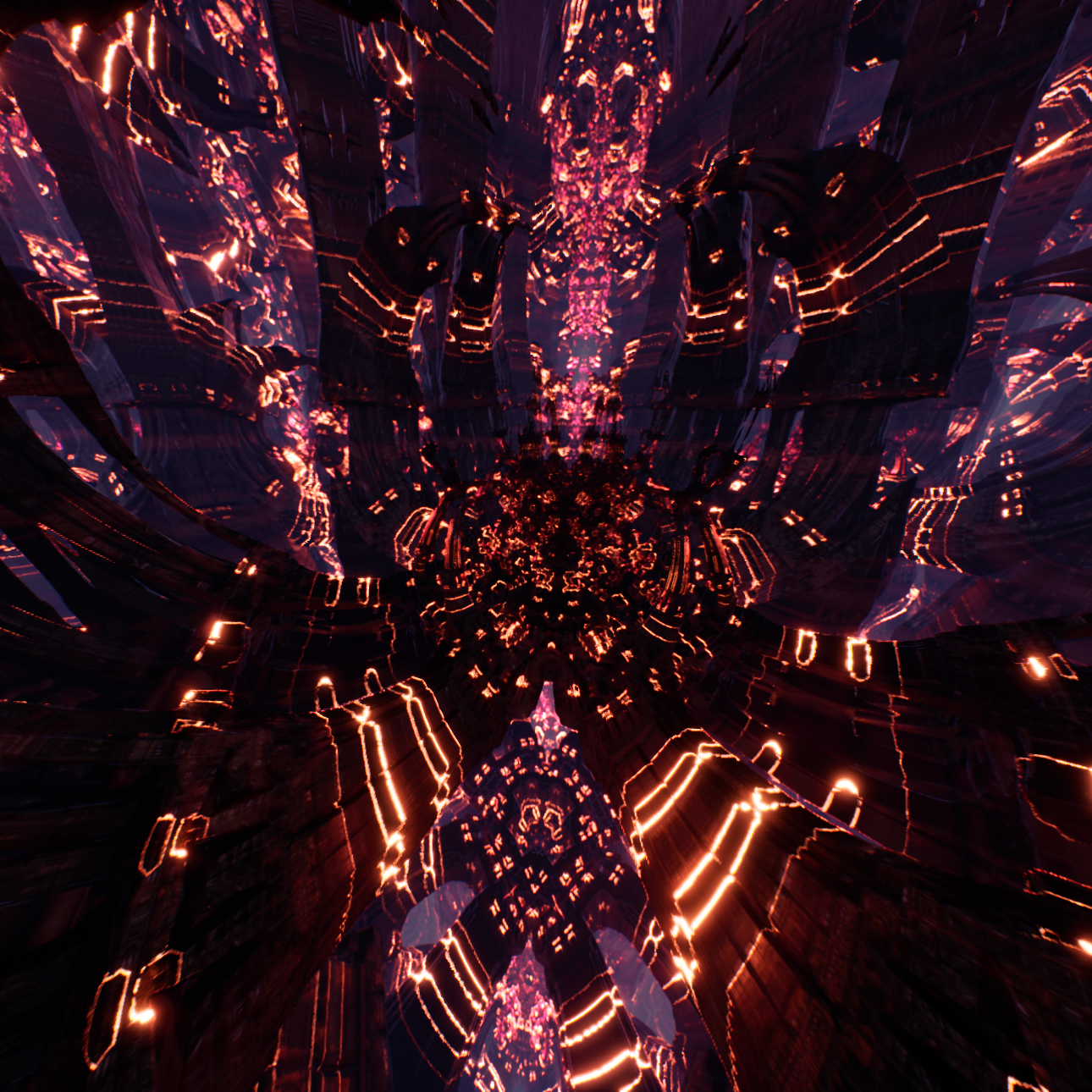

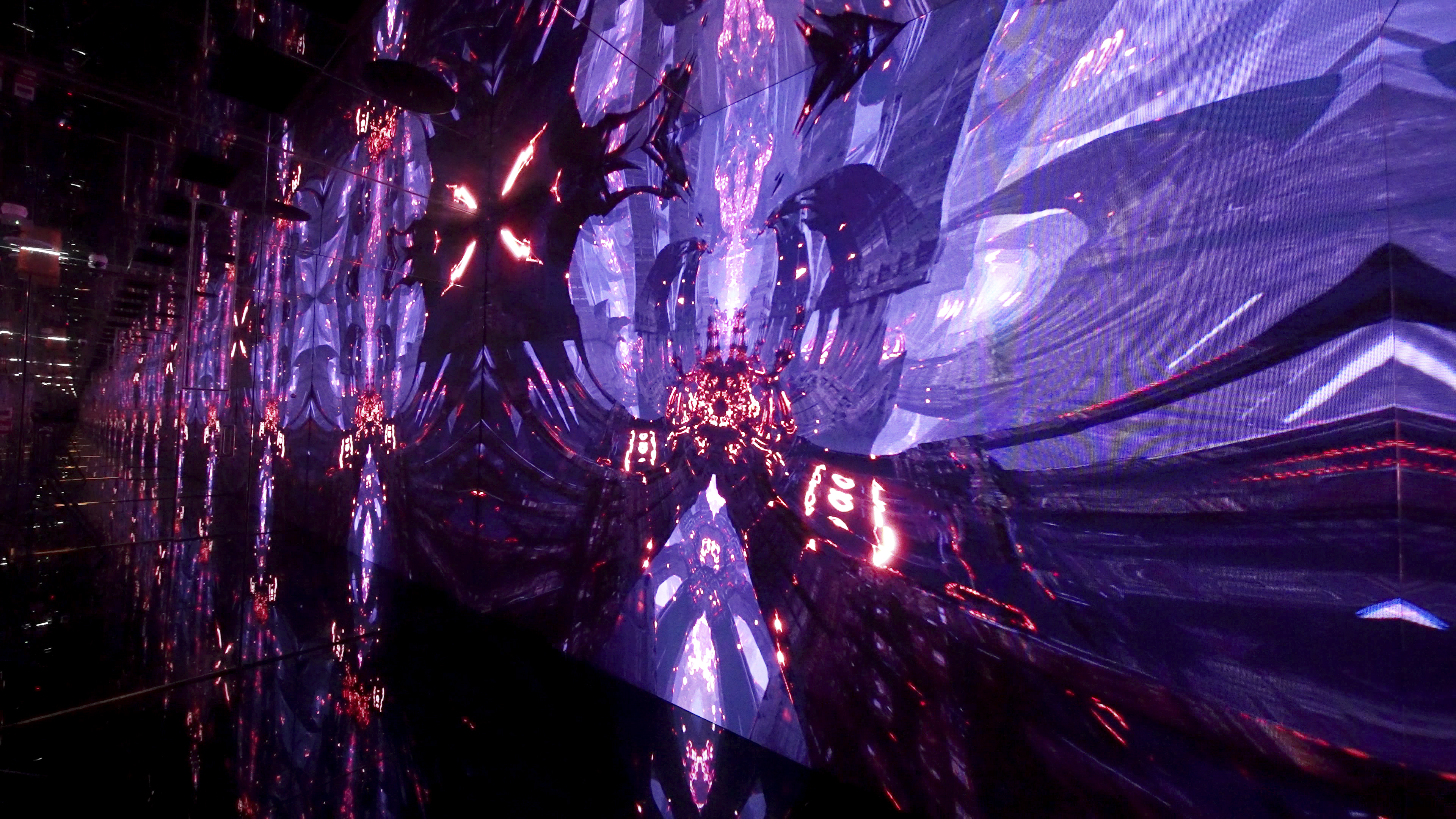

- Perpetual Records (2025): Served as Visual Artist and Technical Designer for a site-specific audiovisual installation in a mirrored-room speakeasy; integrated artist Frog King's symbols as procedural morphing elements within the environment, reinterpreting them through the Akashic Records framework; developed a generative visual system in Unreal Engine using advanced shaders, recursive geometry, procedural textures, moiré patterns, dynamic refractions, and cymatics-driven particles; designed a camera path to disrupt spatial orientation and enhance immersion.

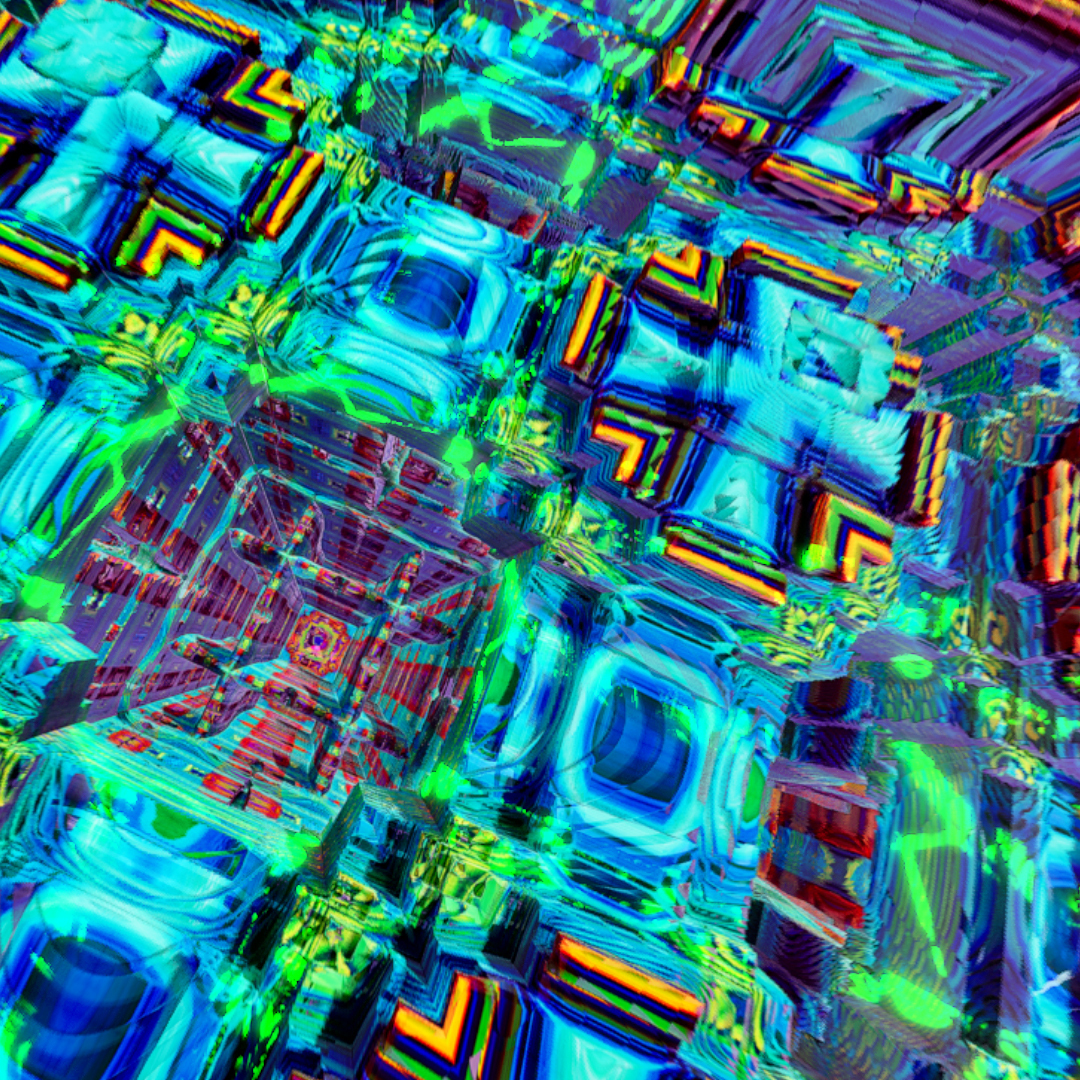

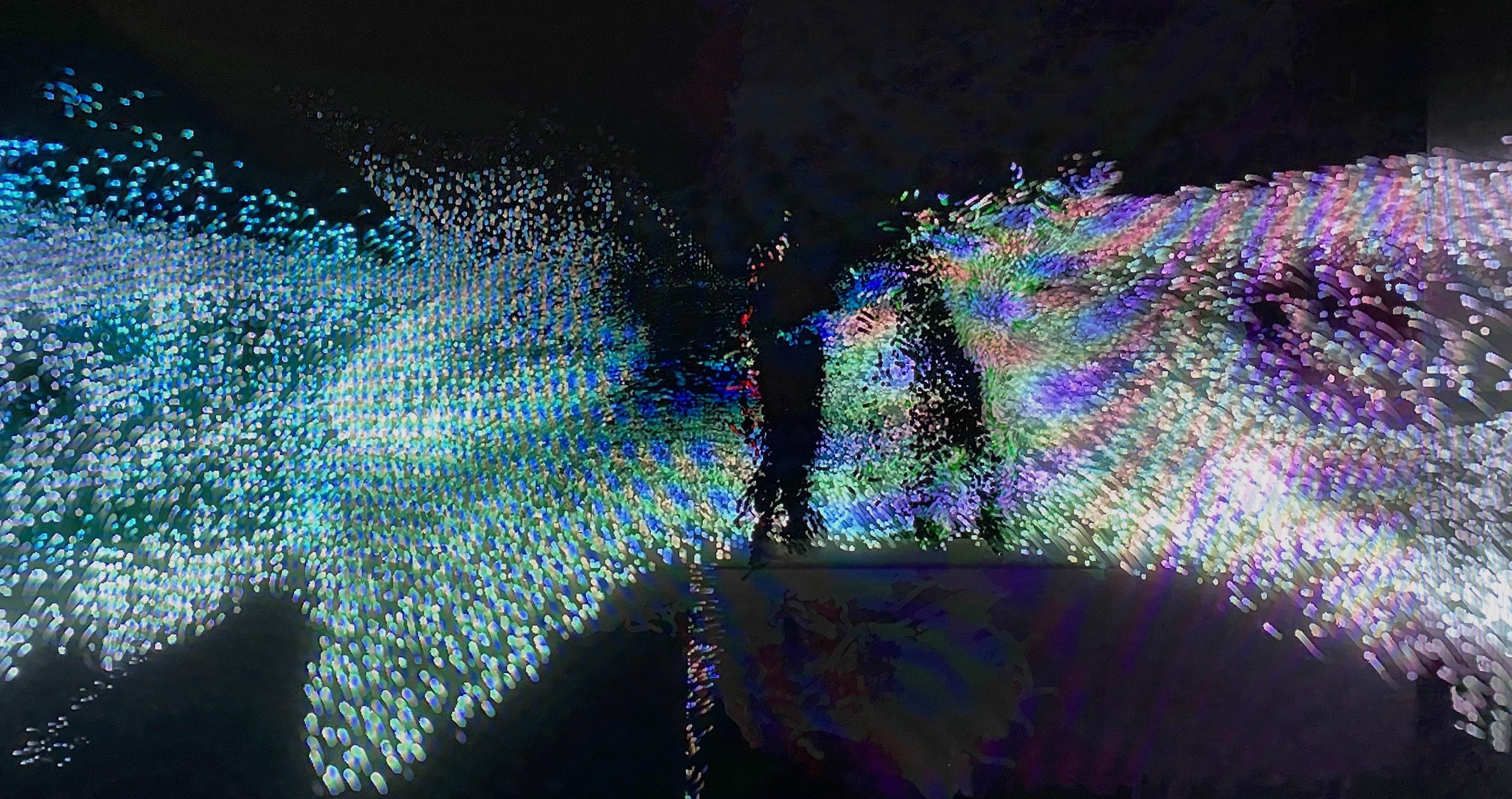

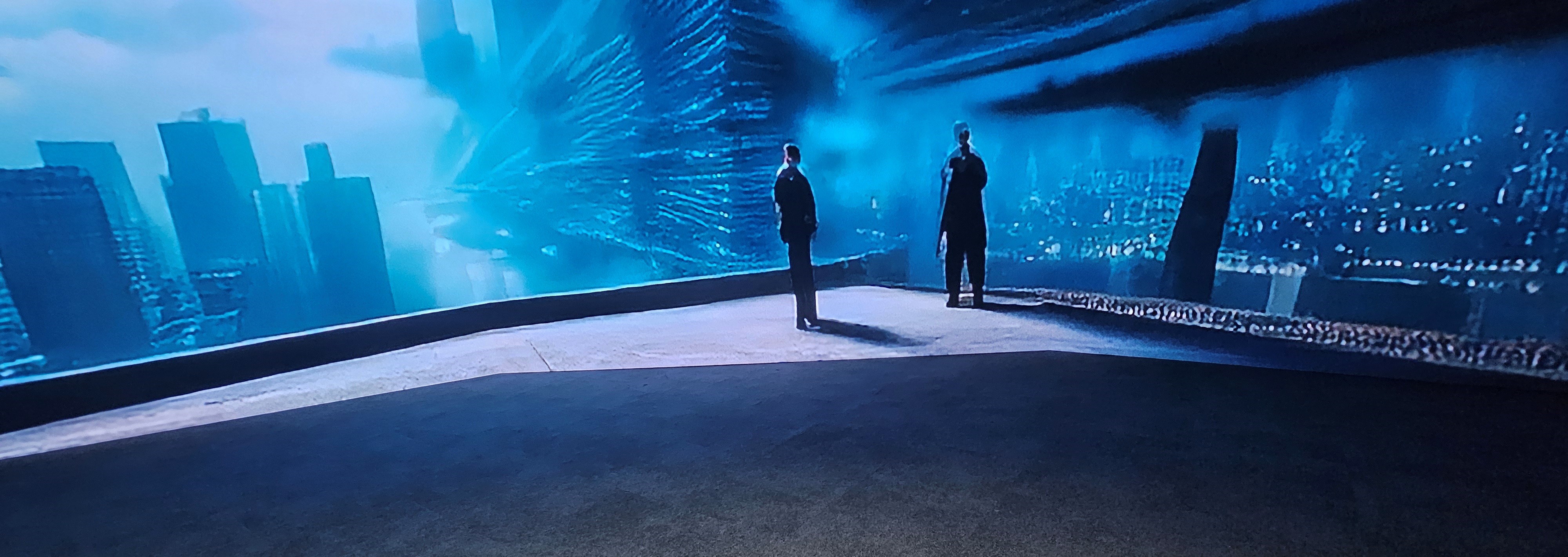

- Cinemorpheque (2024-2025): Co-developed an interactive installation for a year-long exhibition at HKMoA (2+ million annual visitors), immersing audiences in AI-generated cinematic landscapes; programmed real-time projection of evolving visuals onto a large-scale LED wall using custom GANs trained on over 10,000 images; engineered integration of 3D body silhouettes captured via Kinect and tracking cameras with Canny edges, segmentation maps, and depth maps; designed particle systems and interactive logic in TouchDesigner and StreamDiffusion to enable audience silhouettes to merge with and influence generative worlds.

- ChoreoMusica (2024): Developed audio-reactive visuals and engineered the master technical system for a concert of Piazzolla's Four Seasons; designed and built a Python-based master scene controller to integrate multiple artists' TouchDesigner projects on a single machine, enabling stable cue-based transitions and preventing GPU overload during live performance; created visual segments using a StreamDiffusionTD-assisted pipeline and generative systems driven by custom mathematical particle networks.

- The Flow Pavilion (2025): Designed and built an end-to-end kinetic system translating meditation EEG brainwave data recorded at 10 Japanese temple sites into motion paths for a robotic sphere on a custom silk carpet. Defined mathematical logic and modeled robotic motion as a function of multi-band EEG data, deriving the primary trajectory from Delta/Theta waves and path deviations from high-frequency activity. Applied smoothing via moving averages, spline interpolation, and Gaussian filters to emulate Zen sand raking aesthetics. Adapted algorithms to robot constraints such as cornering, motor speed, and carpet resistance. Developed custom TouchDesigner and Python modules; collaborated with robotics and hardware teams to validate and refine pavilion performance.

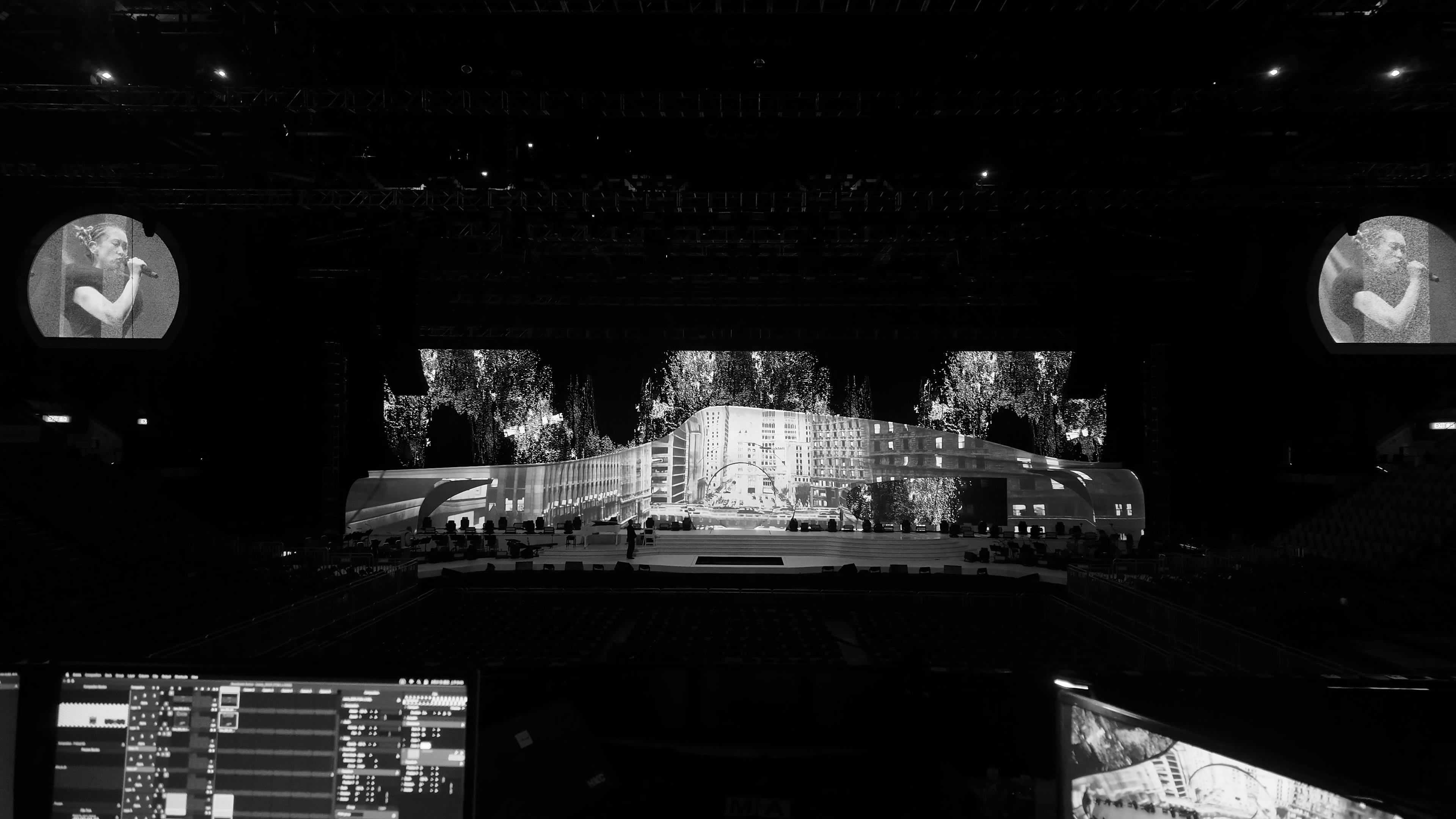

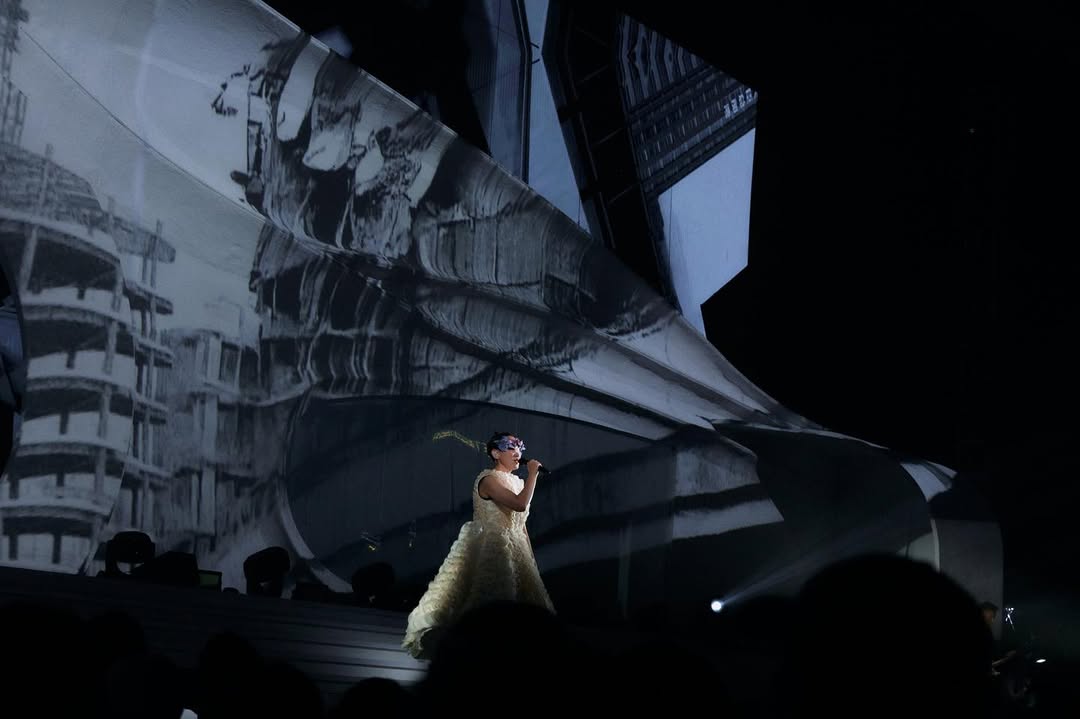

- Ivana Wong Concert (2025): Designed a TouchDesigner-based audiovisual system for live concert visuals at the Hong Kong Coliseum, a 12,500-seat arena; created fish-eye distortions and integrated a textured point cloud model of Kowloon City Plaza; synchronized TouchDesigner audio reactivity with client footage, incorporating particle overlays and displacement effects; collaborated with stage producers and visual artists to maintain technical compatibility and narrative coherence across multimedia elements.

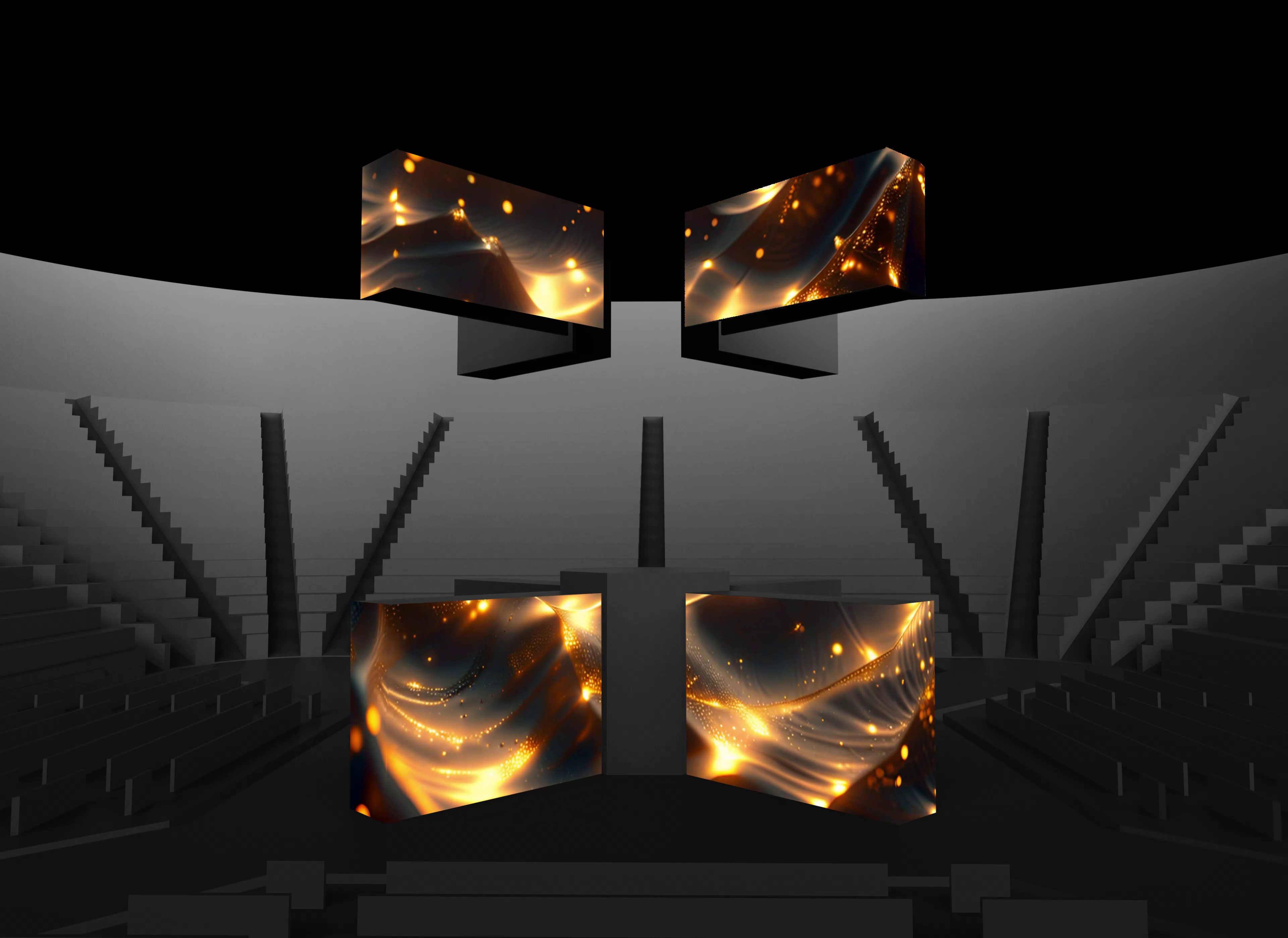

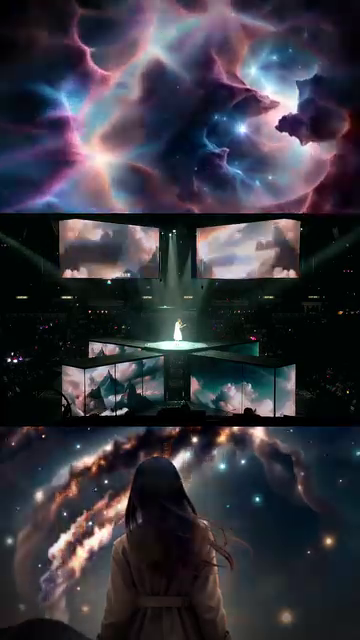

- Sammi Cheng Concert (2024): Developed AI-driven morphing animation sequences using AnimateDiff and ControlNet (OpenPose) for Sammi Cheng's 'A Time for Everything' performance at the Hong Kong Coliseum, a 12,500-seat arena; delivered zero-downtime performance across a 90-minute live show; optimized visuals for large-scale LED projection; employed outpainting and high-resolution upscaling techniques to ensure visual fidelity; delivered final assets adhering to strict VRAM and aspect ratio constraints; collaborated remotely with production teams to integrate visuals seamlessly into the concert's multimedia direction.

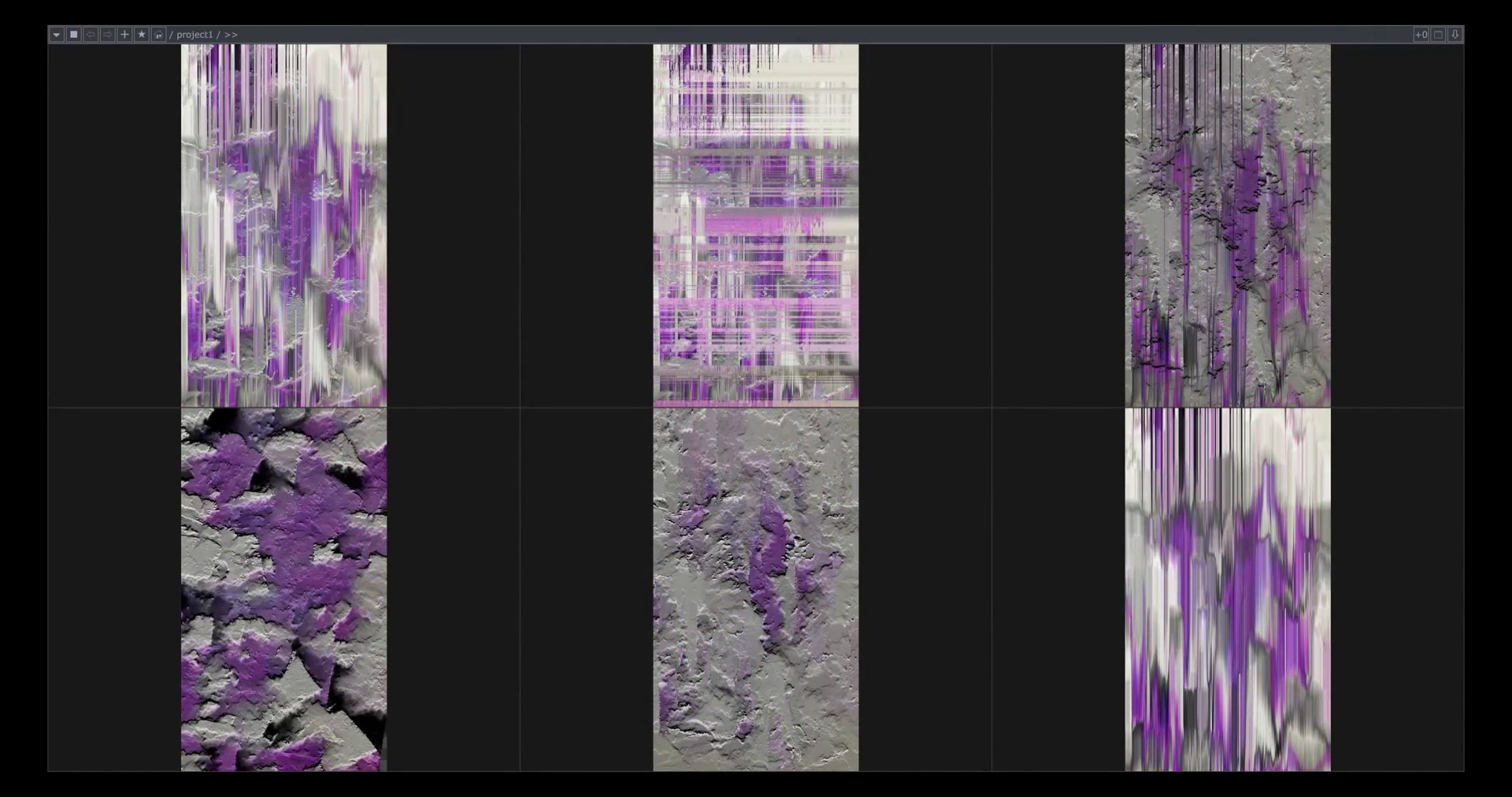

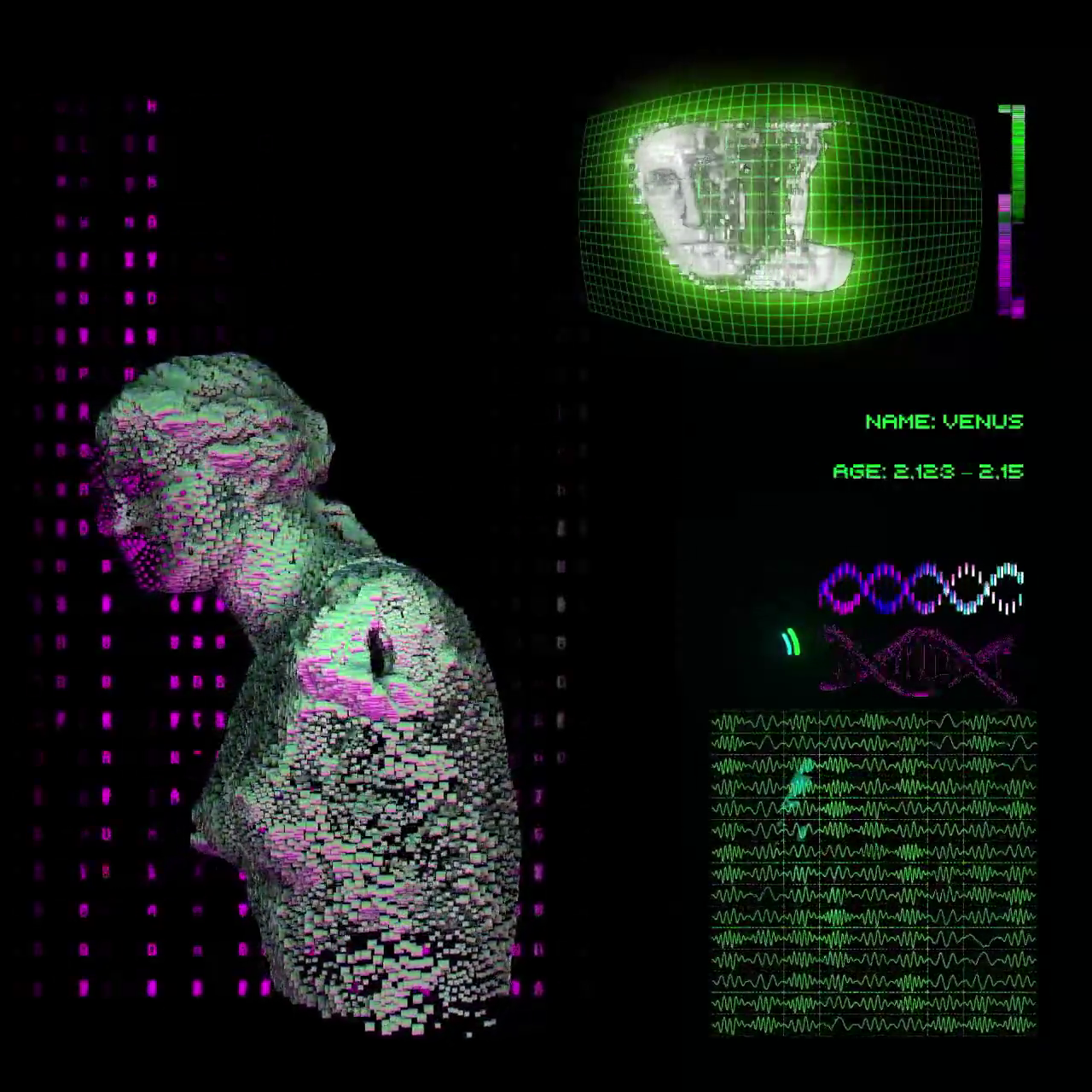

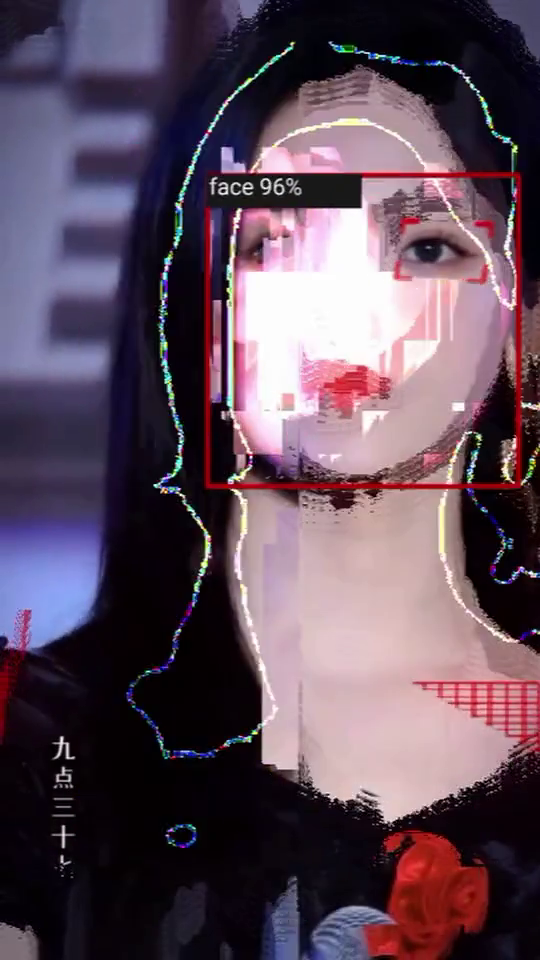

- FutureTense Festival (2024-2025): Developed the core concept and produced the complete visual identity for the festival awards' campaign theme, "define safe"; designed and built generative systems in TouchDesigner and MediaPipe; integrated real-time face and body tracking, thermal scans, and feedback networks to generate motion assets; delivered glitch-based transitions, pixel sorting, and AI-driven masking effects across multiple formats supporting branding and exhibitions; addressed thematic directions forming the event's core curatorial statement.

AI Gameplay & Asset Pipeline @ 3NS.ai, Remote (London)

May 2024 – June 2024

- Executed an R&D sprint for a Web3 AI game, prototyping core mechanics and establishing the generative 2D/3D asset pipeline (Blender, Stable Diffusion) in a remote, agile environment.

Independent work includes: building AR gesture interaction systems with findings published in the AR Antaeus journal; creating audio-reactive 3D visuals and AI animations; designing and developing experimental PC/Android games.

Education

Skills

Programming & Development

- Python, C#, Java, Node.js, HLSL/GLSL, Git

- PostgreSQL, SQLite, MySQL, TensorFlow, Keras

- NumPy, Pandas, OpenCV, openFrameworks, OSC, Cairo, Flask, REST APIs, HTML, CSS

Software & Tools

- Unreal Engine, Unity, TouchDesigner

- Stable Diffusion (ControlNet, AnimateDiff), GANs, CLIP, MediaPipe

- Kinect, StreamDiffusion, NVIDIA FleX, Postman, React, Blender, Adobe CS, Figma

Spoken Languages

- Italian (Native)

- English (Bilingual Fluency)

- Korean (Professional Working Fluency)

Certificates & Honors

Gold Certificate of Acknowledgement, Introduction to Videogames Creation

Issued June 2023

Xamk South-Eastern Finland University of Applied Sciences

NYU Film and TV Industry Essentials Certificate (Awarded a Scholarship)

Issued December 2022

New York University (NYU)